Then there’s the question of umwelt. Many animals use scent to convey information, some use electrical fields, others stridulate (make noise by rubbing body parts together like a cricket), and some create seismic waves.

While technology may enable humans to capture these more unexpected communication modes, for Yovel the field is still dependent on human interpretation. A lot of the training data for generative AI models is based on datasets labelled by human researchers.

“I’ll write that the bats are fighting over food, but maybe that’s not true,” Yovel explains, “maybe they’re fighting over something that I have no clue about because I am a human. Maybe they have just seen the magnetic field of earth, which some animals can.” Removing the bias of human interpretation, Yovel says, is impossible.


Others argue that if enough relevant data is gathered, AI would eliminate that bias. But wild animals don’t tend to co-operate with human data-gathering endeavours, and microphone technology has its limits. “People think we’ll measure everything and put it into AI machines. We are not even close to that,” says Yovel.

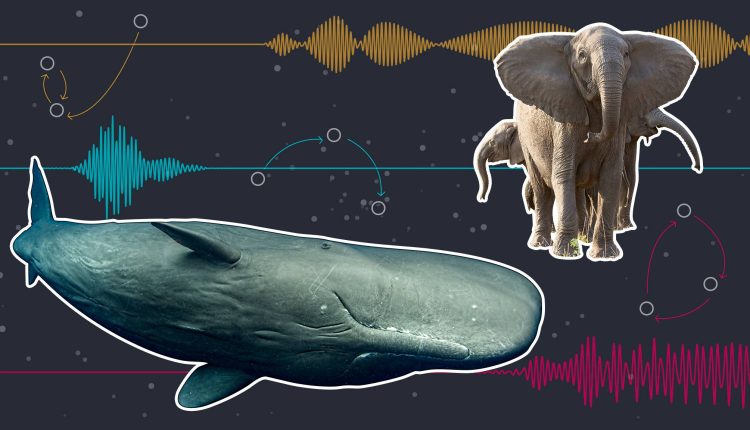

Poole says she has spoken to CETI’s David Gruber about how to collect data on elephants in a non-invasive way: “Sperm whales have robotic fish, could we have robotic egrets or something?”

Yet even if enough of the right data could be run through generative AI, what would humans make of the computerised rumbles and squeaks, or scents and seismic waves?

What is meaningful to an algorithm may still be unintelligible to a human. As one researcher put it, any translation depends on testing what the AI has learnt.

Traditionally, this is done through a playback experiment: a recording of a vocalisation is played to members of a species to see how they respond. Yet getting a clear answer on what something means is not always possible.

Yovel describes playing back recordings of different aggressive calls to the bats he studies, and each call being met with a twitch of the ears. “There’s no clear difference in the response and it’s very difficult to come up with an experiment that will measure these differences,” he says.

With generative AI, these playback experiments could now be done using synthesised animal voices, a prospect that thrusts this research into a delicate ethical balance.

In the human world, debates around ethics, boundary setting and AI are fraught, especially when it comes to deepfaked human voices. There is already evidence that playing back pre-recorded animal sounds can affect behavioural patterns. Earth Species founder Raskin shares an anecdote about accidentally playing a whale's name back to it, after which the whale became distressed.

The unknowns with using synthetic voices are vast. Take humpback whales: the songs sung by humpbacks in one part of the world get picked up and spread until a song that emanates from the coast of Australia can be heard on the other side of the world a couple of seasons later, says Raskin.

“Humans have been passing down culture vocally for maybe 300,000 years. Whales and dolphins have been passing down culture vocally for 34mn [years],” he explains. If researchers start emitting AI-synthesised whalesong into the mix “we may create like a viral meme that infects a 34mn-year-old culture, which we probably shouldn’t do.”

“The last thing any of us want to do is be in a scenario where we look back and say, like Einstein did, ‘If I had known better I would have never helped with the bomb,’” says Gero.
