Stay informed with free updates

Simply sign up to the Artificial intelligence myFT Digest — delivered directly to your inbox.

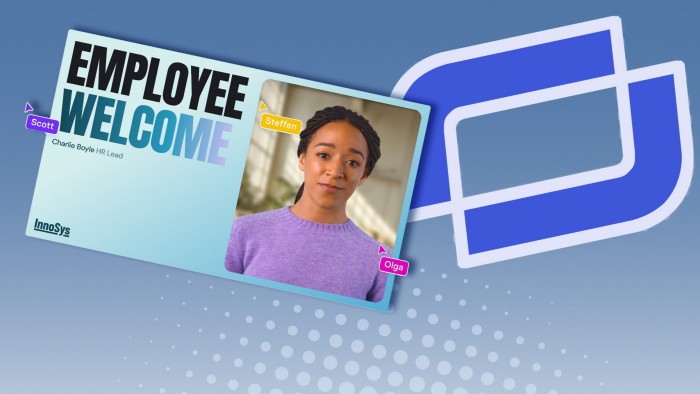

London-based artificial intelligence start-up Synthesia is offering company stock to the human actors it uses to generate digital “avatars” of people, in a radical move for the AI industry that rethinks how workers are compensated for helping to train the cutting-edge technology.

The company, which hit a $2.1bn valuation this year, announced on Tuesday that it is creating a pool of company shares at present worth $1mn through which it will reward actors with equity in return for the use of their likeness and help in crafting new products and features.

Synthesia chief executive Victor Riparbelli said the initiative was a recognition that the actors it hired to train its AI models were also the faces of the company.

“Everyone who works at Synthesia has shares in the company,” Riparbelli said. “Ultimately, the actors are also employees to some extent.”

Synthesia licenses actors’ likenesses for three years to build its hyper-realistic AI avatars, and pays them in cash for about a day’s worth of work. Actors can opt out of having their appearance and voice used in the avatars at any time. The new stock programme will be offered to the actors behind the most popular avatars.

The move addresses one of the key concerns of people in the creative industries, who have argued they are not being properly paid for the use of their work to train sophisticated AI models and products. Actors and musicians are often offered one-off fees by AI companies, but must then allow them to use that work in perpetuity.

The initiative comes as leading tech groups such as OpenAI have sought to make multimillion-dollar deals with media organisations and content publishers, while also being sued by other groups over claims that they have already breached copyright laws in training their models.

Synthesia’s move to offer shares “recognises the financial and reputational significance for actors licensing their synthetic likeness”, said Henry Ajder, an expert on generative AI and deepfakes, who is not part of the initiative.

The company has previously faced criticism from actors’ union Equity over the creation of so-called “deepfakes” or non-consensual digital clones of real people, when some of its AI-generated avatars were used in 2023 to spread political propaganda in support of the Chinese and Venezuelan governments. Synthesia has since banned these clients from its platform and put in place stricter content-moderation practices.

However, even with better content moderation and safety features, no AI system was entirely immune from potential misuse, warned Ajder.

“Actors need to be aware of this when considering how their synthetic likeness might appear in the world outside of their immediate control and whether the potential risk, however small, is something they are willing to accept,” he added.