For anyone wanting to train an LLM on analyst responses for DeepSeek, the Temu of ChatGPTs, this post is a one-stop shop. We’ve grabbed all relevant sellside emails in our inbox and copy-pasted them with minimal intervention.

DeepSeek is a two-year-old, Hangzhou-based spinout of a Zhejiang University company that used machine learning to trade equities. Its stated goal is to make an artificial general intelligence for the fun of it, not for the money. There’s a good interview on ChinaTalk with founder Liang Wenfeng, and mainFT has just published this great overview from our colleagues Eleanor Olcott and Zijing Wu.

Mizuho’s Jordan Rochester takes up the story . . .

[O]n Jan 20, [DeepSeek] released an open source model (DeepSeek-R1) that beats the industry’s leading models on some math and reasoning benchmarks including capability, cost, openness etc. Deepseek app has topped the free APP download rankings in Apple’s app stores in China and the United States, surpassing ChatGPT in the U.S. download list.

What really stood out? DeepSeek said it took 2 months and less than $6m to develop the model – building on already existing technology and leveraging existing models. In comparison, Open AI is spending more than $5 billion a year. Apparently DeepSeek bought 10,000 NVIDIA chips whereas Hyperscalers have bought many multiples of this figure. It fundamentally breaks the AI Capex narrative if true.

Sounds bad, but why? Here’s Jefferies’ Graham Hunt et al:

With DeepSeek delivering performance comparable to GPT-4o for a fraction of the computing power, there are potential negative implications for the builders, as pressure on AI players to justify ever increasing capex plans could ultimately lead to a lower trajectory for data center revenue and profit growth.

The DeepSeek R1 model is free to play with here, and does all the usual stuff like summarising research papers in iambic pentameter and getting logic problems wrong. The R1-Zero model, DeepSeek says, was trained entirely without supervised fine tuning.

Here’s Damindu Jayaweera and team at Peel Hunt with more detail.

Firstly, it was trained in under 3 million GPU hours, which equates to just over $5m training cost. For context, analysts estimate Meta’s last major AI model cost $60-70m to train. Secondly, we have seen people running the full DeepSeek model on commodity Mac hardware in a usable manner, confirming its inferencing efficiency (using as opposed to training). We believe it will not be long before we see Raspberry Pi units running cutdown versions of DeepSeek. This efficiency translates into hosted versions of this model costing just 5% of the equivalent OpenAI price. Lastly, it is being released under the MIT License, a permissive software license that allows near-unlimited freedoms, including modifying it for proprietary commercial use.
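For anyone who wants to sanity-check the arithmetic in that note, here is a rough sketch. It assumes round numbers read off the quote (3 million GPU hours as an upper bound, $5m as a lower bound, $60-70m for Meta), and the function and variable names are ours, not Peel Hunt’s or DeepSeek’s.

```python
def implied_rate_per_gpu_hour(total_cost_usd: float, gpu_hours: float) -> float:
    """Return the price per GPU-hour implied by a total training cost."""
    if gpu_hours <= 0:
        raise ValueError("gpu_hours must be positive")
    return total_cost_usd / gpu_hours


# Assumed round figures taken from the quoted note; both are approximations.
GPU_HOURS = 3_000_000            # "under 3 million GPU hours" (upper bound)
TRAINING_COST_USD = 5_000_000    # "just over $5m" (lower bound)
META_COST_RANGE_USD = (60_000_000, 70_000_000)  # "$60-70m" analyst estimate

rate = implied_rate_per_gpu_hour(TRAINING_COST_USD, GPU_HOURS)
ratios = tuple(cost / TRAINING_COST_USD for cost in META_COST_RANGE_USD)

print(f"Implied rate: about ${rate:.2f} per GPU-hour")
print(f"Meta estimate: {ratios[0]:.0f}x to {ratios[1]:.0f}x the DeepSeek figure")
```

Because the hours are an upper bound and the cost a lower bound, the implied rate is a floor rather than a point estimate. On these inputs the Meta comparison works out at roughly 12 to 14 times the DeepSeek figure.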

DeepSeek’s not an unanticipated threat to the OpenAI Industrial Complex. Even The Economist had spotted it months ago, and industry mags like SemiAnalysis have been talking for ages about the likelihood of China commoditising AI.

That might be what’s happening here, or might not. Here’s Joshua Meyers, a specialist sales person at JPMorgan:

It’s unclear to what extent DeepSeek is leveraging High-Flyer’s ~50k Hopper GPUs (similar in size to the cluster on which OpenAI is believed to be training GPT-5), but what seems likely is that they’re dramatically reducing costs (inference costs for their V2 model, for example, are claimed to be 1/7 that of GPT-4 Turbo). Their subversive (though not new) claim – that started to hit the US AI names this week – is that “more investments do not equal more innovation.” Liang: “Right now I don’t see any new approaches, but big firms do not have a clear upper hand. Big firms have existing customers, but their cash-flow businesses are also their burden, and this makes them vulnerable to disruption at any time.” And when asked about the fact that GPT5 has still not been released: “OpenAI is not a god, they won’t necessarily always be at the forefront.”

Best that no-one tells Altman that. Back to Mizuho:

Why this comes at a painful moment? This is happening after we just saw a Texas Hold’em ‘All In’ push of the chips with respect to the Stargate Announcement (~$500B by 2028E) and Meta taking up CAPEX officially to the range of $60-$65B to scale up Llama and of course MSFT’s $80B announcement…..The markets were literally trying to model just Stargate’s stated demand for ~2mln units from NVDA when their total production is only 6mn…..(Nvidia’s European trading is down 9% this morning, Softbank was down 7%). Markets are now wondering if this is an AI bubble popping moment for markets or not (i.e. a dot-com bubble for Cisco). Nvidia is the largest individual company weight of S&P500 at 7%.

And Jefferies again.

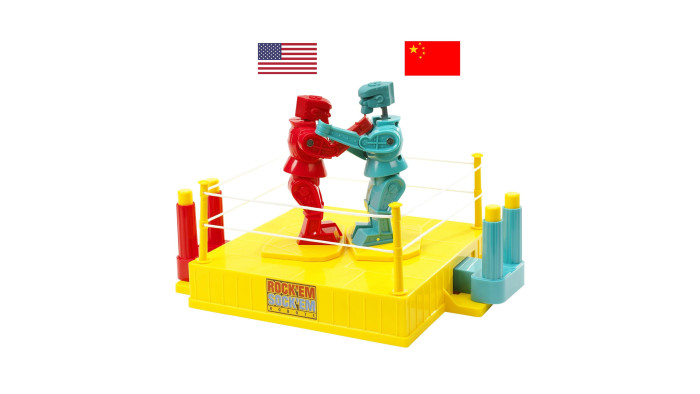

1) We see at least two potential industry strategies. The emergence of more efficient training models out of China, which have been driven to innovate due to chip supply constraints, is likely to further intensify the race for AI dominance between the US and China. The key question for the data center builders, is whether it continues to be a “Build at all Costs” strategy with accelerated model improvements, or whether focus now shifts towards higher capital efficiency, putting pressure on power demand and capex budgets from the major AI players. Near term the market will assume the latter.

2) Derating risk near term, earnings less impacted. Although data center exposed names are vulnerable to derating on sentiment, there is no immediate impact on earnings for our coverage. Any changes to capex plans apply with a lag effect given duration (>12M) and exposure in orderbooks (~10% for HOT). We see limited risk of alterations or cancellations to existing orders and expect at this stage a shift in expectations to higher ROI on existing investments driven by more efficient models. Overall, we remain bullish on the sector where scale leaders benefit from a widening moat and higher pricing power.

Though it’s the Chinese, so people are suspicious. Here’s Citi’s Atif Malik:

While DeepSeek’s achievement could be groundbreaking, we question the notion that its feats were done without the use of advanced GPUs to fine tune it and/or build the underlying LLMs the final model is based on through the Distillation technique. While the dominance of the US companies on the most advanced AI models could be potentially challenged, that said, we estimate that in an inevitably more restrictive environment, US’ access to more advanced chips is an advantage. Thus, we don’t expect leading AI companies would move away from more advanced GPUs which provide more attractive $/TFLOPs at scale. We see the recent AI capex announcements like Stargate as a nod to the need for advanced chips.

It’s spooky stuff for the Mag7’s ROI, of course, but is that a good reason for a wider market selloff?

Cheap Chinese AI means more productivity benefits, lower build costs and an acceleration towards the Andreessen Theory of Cornucopia so maybe . . . good news in the long run? JPMorgan’s Meyers again:

This strikes me not about the end of scaling or about there not being a need for more compute, or that the one who puts in the most capital won’t still win (remember, the other big thing that happened yesterday was that Mark Zuckerberg boosted AI capex materially). Rather, it seems to be about export bans forcing competitors across the Pacific to drive efficiency: “DeepSeek V2 was able to achieve incredible training efficiency with better model performance than other open models at 1/5th the compute of Meta’s Llama 3 70B. For those keeping track, DeepSeek V2 training required 1/20th the flops of GPT-4 while not being so far off in performance.” If DeepSeek can reduce the cost of inference, then others will have to as well, and demand will hopefully more than make up for that over time.

That’s also the view of semis analyst Tetsuya Wadaki at Morgan Stanley, the most AI-enthusiastic of the big banks.

We have not confirmed the veracity of these reports, but if they are accurate, and advanced LLMs are indeed able to be developed for a fraction of previous investment, we could see generative AI run eventually on smaller and smaller computers (downsizing from supercomputers to workstations, office computers, and finally personal computers) and the SPE industry could benefit from the accompanying increase in demand for related products (chips and SPE) as demand for generative AI spreads.

And Peel Hunt again:

We believe the impact of those advantages will be twofold. In the medium to longer term, we expect LLM infrastructure to go the way of the telco infrastructure and become a ‘commodity technology’. The financial impact on those deploying AI capex today depends on regulatory interference – which had a major impact on Telcos. If we think of AI as another ‘tech infrastructure layer’, like the internet, the mobile, and the cloud, in theory the beneficiaries should be companies that leverage that infrastructure. While we think of Amazon, Google, and Microsoft as cloud infrastructure, this emerged out of the need to support their existing business models: e-commerce, advertising and information-worker software. The LLM infrastructure is different in that, like the railroads and telco infrastructure, these are being built ahead of true product/market fit.

We’ll keep adding to this post as the emails keep landing.

Further reading:

— Chinese start-ups such as DeepSeek are challenging global AI giants (FT)

— How small Chinese AI start-up DeepSeek shocked Silicon Valley (FT)
